The errors, or rather defects in the jargon, that static analysis tools produce can be considered low-cost, well-formed bug reports that are available very early in the development process.

I say low cost because they are found by a machine, without a user or fellow developer spending their time finding them. I say well formed because the machine can describe exactly how it reached the logical deduction that leads to the defect.

Introduction

Static analysis is, in general terms, using the computer to examine a program for logic errors beyond those of pure syntax before it is executed. Examining a running program for defects is known as dynamic program analysis and, while a powerful tool in its own right, is not the topic of discussion here.

This analysis has historically been confined to compiled languages, as their compilers already had the Abstract Syntax Tree (AST) of the code available for analysis. As an example, the C language (released in 1972) had the lint tool (released in 1979), which was based on the PCC compiler.

Practical early compilers (I am generalising here, as the 1970s were a time of white hot innovation in computing and examples of just about any innovation in the field could probably be found) were pretty primitive and produced executables which were worse than hand-written assembler. Due to practical constraints the progress of optimising compilers was not as rapid as might be desired, so static analysis was largely used as an external process.

Before progressing I ought to explain why I just mixed the concepts of an optimising compiler and static analysis. The act of optimisation within those compilers requires program analysis, from which they can generate the defect reports we all know and love as compiler warnings. This also explains why many warnings only appear at higher optimisation levels, where deeper analysis is required.

The attentive reader may now enquire as to why we would need external analysis tools when our compilers already perform the task. The answer stems from the issue that a compiler is trying to reconcile many desirable traits including:

- Produce correct (expected) output from the source code for the target processor

- Produce output which will execute using the smallest amount of resources possible (not just CPU time but memory access and cache usage)

- Generate output in a reasonable amount of time

- Have a reasonable cost (both developer time and research into new methods) to implement the compiler itself.

- Produce useful diagnostics

The slow progress in creating optimising compilers initially centred on the problem of producing the compiled output in a reasonable time, to allow for a practical edit-compile-run-debug cycle, although more recently the issues have moved towards the cost of implementing the compiler.

Because the output generation time is still a significant factor, compilers limit the level of static analysis performed to that strictly required to produce good output. In standard operation optimising compilers do not do the extended analysis necessary to find all the defects that might be detectable.

An example: compiling one 200,000 line C program with the clang (v3.3) compiler, producing x86 binaries at optimisation level 2, took 70 seconds, but using the clang based scan-build static analysis tool took 517 seconds, or more than seven times as long.

Using static analysis

As already described, warnings are a by-product of an optimising compiler's analysis, and most good programmers will endeavour to remove all warnings from a project. Thus almost all programmers are already using static analysis to some degree.

The external analysis tools available can produce many more defect reports than the compiler alone, as long as the developer is prepared to wait for the output. Because of this delay static analysis is often done outside the developer's usual cycle and is often integrated into a project's Continuous Integration (CI) system.

The resulting defects are usually presented as annotated source code with a numbered list of logical steps which show how the defect can arise. For example, the steps might highlight a line of code which allocates memory from the heap and then an exit path on which no reference to the allocated memory is kept, resulting in a resource leak.
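The kind of path described above can be sketched in C. This is only an illustration: the function names and the `.bak` suffix are hypothetical, and the numbered comments imitate the style of a defect report rather than reproducing the output of any particular tool. A second version shows one possible way of closing the leak.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: returns a heap-allocated copy of name with ".bak"
 * appended, or NULL on failure. This version contains a resource leak. */
char *make_backup_name_leaky(const char *name)
{
    size_t len = strlen(name);
    char *buf = malloc(len + 5);    /* step 1: memory is allocated */

    if (buf == NULL) {
        return NULL;
    }
    if (len == 0) {                 /* step 2: assume this branch is taken */
        return NULL;                /* step 3: buf is lost here, so it leaks */
    }
    memcpy(buf, name, len);
    memcpy(buf + len, ".bak", 5);   /* 5 bytes: four characters plus the NUL */
    return buf;
}

/* One possible fix: reject the empty name before anything is allocated. */
char *make_backup_name_fixed(const char *name)
{
    size_t len = strlen(name);
    char *buf;

    if (len == 0) {
        return NULL;                /* nothing allocated yet, nothing to leak */
    }
    buf = malloc(len + 5);
    if (buf == NULL) {
        return NULL;
    }
    memcpy(buf, name, len);
    memcpy(buf + len, ".bak", 5);
    return buf;                     /* ownership passes to the caller */
}
```

In both versions the caller is expected to `free()` the returned string. The leak only occurs for an empty name, which is exactly the sort of rarely exercised path that testing tends to miss.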

The level of erroneous defect reports varies depending on the codebase being analysed and how good the analysis tool being used is. It is not uncommon to see false positive rates, even with the best tools, in excess of 10%.

Good tools allow for this and provide ways to supply additional context through model files or hints in the source code to suppress the incorrect defect reports. This is analogous to using asserts to explicitly constrain variable values or a type cast to suppress a type warning.
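As a minimal sketch of constraining a value with an assert, consider a small lookup table. The names `TABLE_SIZE`, `table_set` and `table_get` are invented for this example. An analyser that cannot see every caller has no way of knowing that the index is always in range; the assert states that assumption explicitly. Whether a given tool makes use of it depends on the tool, and on the build not defining `NDEBUG`.

```c
#include <assert.h>
#include <stddef.h>

#define TABLE_SIZE 16 /* hypothetical size, for illustration only */

static int table[TABLE_SIZE];

/* Store a value; the assert documents that callers must pass a valid index. */
void table_set(size_t idx, int value)
{
    assert(idx < TABLE_SIZE);
    table[idx] = value;
}

/* Fetch a value under the same explicit constraint on idx. */
int table_get(size_t idx)
{
    assert(idx < TABLE_SIZE);
    return table[idx];
}
```

The assert serves the human reader as much as the tool: it records an assumption that was previously only in the developer's head.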

Even once the false positives have been dealt with, there remains the problem of defects which, while theoretically possible, take so many steps to achieve that their probability is remote at best. These defects are often better categorised as a missing constraint, and the better analysis tools generate fewer of them than the more naive implementations.

An issue with some defect reports is that the defects often cluster in a small number of modules within a program, generally where the developers already know the code is of poor quality, and so add little useful knowledge about a project.

As with all code quality tools, static analysis can be helpful but is not a panacea: code may be completely defect free but still fail to function correctly.

Defect Density

A term that is often used as a metric for code quality is the defect density. This is nothing more than the ratio of defects to thousands of lines of code, e.g. a defect density of 0.9 means that approximately one defect is found in every 1100 lines of code.

The often quoted industry average defect density value is 1. As with all software metrics, this can be a useful indicator but should not be used without understanding.
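The arithmetic is simple enough to sketch in a few lines of C. The function names below are hypothetical and not part of any tool; they only restate the definition above.

```c
#include <stddef.h>

/* Defects per thousand lines of code (KLOC).
 * Returns 0.0 when there is no code to measure. */
double defect_density(size_t defects, size_t lines_of_code)
{
    if (lines_of_code == 0) {
        return 0.0;
    }
    return (double)defects * 1000.0 / (double)lines_of_code;
}

/* Average number of lines of code per defect for a given density.
 * Returns 0.0 for a density that is zero or negative. */
double lines_per_defect(double density)
{
    if (density <= 0.0) {
        return 0.0;
    }
    return 1000.0 / density;
}
```

For instance, `defect_density(9, 10000)` gives 0.9, and `lines_per_defect(0.9)` is a little over 1111, matching the figure of approximately 1100 lines given above. Counting blank lines and comments as lines of code raises the denominator and so lowers the density without a single defect being fixed.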

The value will be affected by improvements in the tool as well as by how lines of code are counted, so it is exceptionally susceptible to gaming, and long term trends must be treated with scepticism.

Practical examples

I have integrated two distinct static analysis tools into the development workflow for the NetSurf project, which I shall present as case studies. These examples show a good open source solution and a commercial offering, highlighting the issues with each.

Several other solutions, both open source and commercial, exist, many of which have been examined and discarded as either impractical or less useful than those selected. However, the investigation was not comprehensive and only considered what was practical for the project at the time.

clang

The clang project is a frontend to the LLVM project providing an optimising compiler for the C, C++ and Objective-C languages. As part of this project the compiler has been enhanced to run a collection of "checkers" which implement various methods of analysis on the code being compiled.

The "scan-build" tool is provided to make using these features straightforward. This tool generates defect reports as a series of HTML files which show the analysis results.

Because scan-build takes in excess of eight minutes on powerful hardware, the NetSurf developers are not going to run this tool themselves as a matter of course. To get the useful output without the downsides, it was decided to integrate the scan into the CI system code quality checks.

Whenever a git commit happens to the mainline branch and the standard check build completes successfully on all target architectures the scan is performed and the results are published as a list of defects.

The list is accessible directly through the CI interface and also incorporates a trend graph showing how many defects were detected in each build.

Each defect listed has a detail link which reveals the full analysis and logic necessary to cause the defect to occur.

Unfortunately even NetSurf, which is a relatively small piece of software (around 200,000 lines of code at time of writing), causes 107 defects to be emitted by scan-build.

All but 25 of the defects are, however, "Dead Store" reports, where a variable has a value assigned which is never read. These errors are simply not interesting to the developers and occur in code generated by a tool.
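A dead store of this kind can be sketched as follows. The function names are hypothetical and the code is not taken from NetSurf; it only shows the general shape of the pattern.

```c
/* Two hypothetical steps that each return a status code. */
static int step_one(void) { return 1; }
static int step_two(void) { return 2; }

int run_steps(void)
{
    int status;

    status = step_one();   /* dead store: overwritten before it is read */
    status = step_two();
    return status;         /* only the second value is ever used */
}
```

Here the report is arguably useful, since the result of `step_one()` is silently discarded. In machine generated code the same pattern is usually a harmless side effect of the generator's templates, which is why such reports are of little interest.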

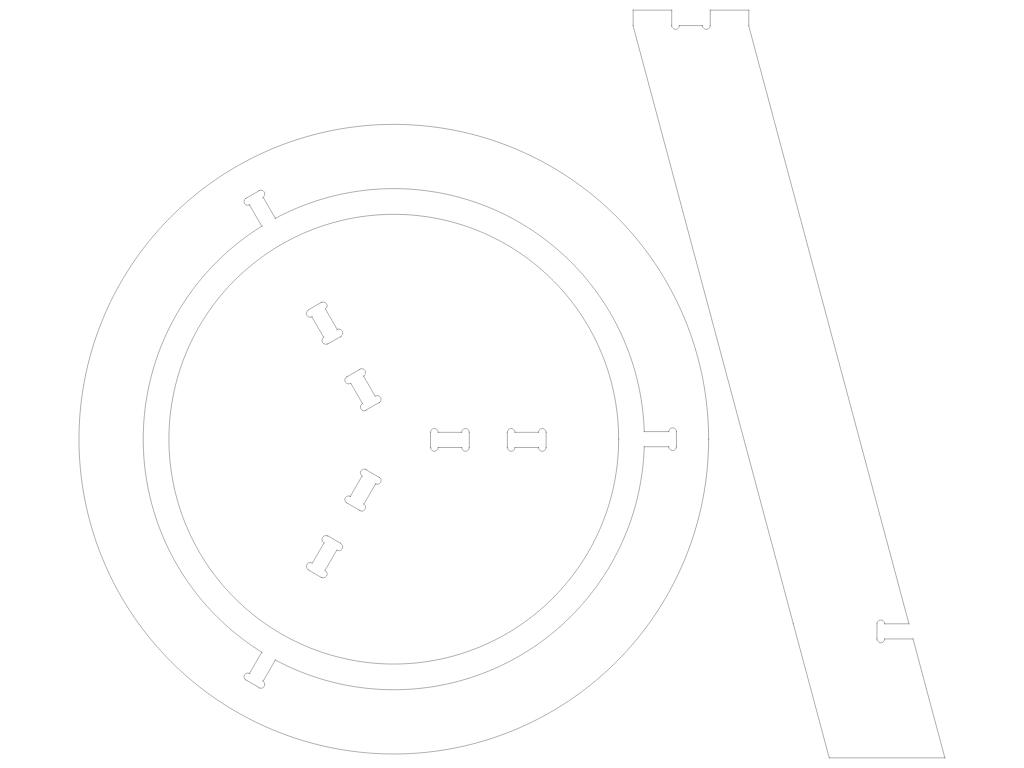

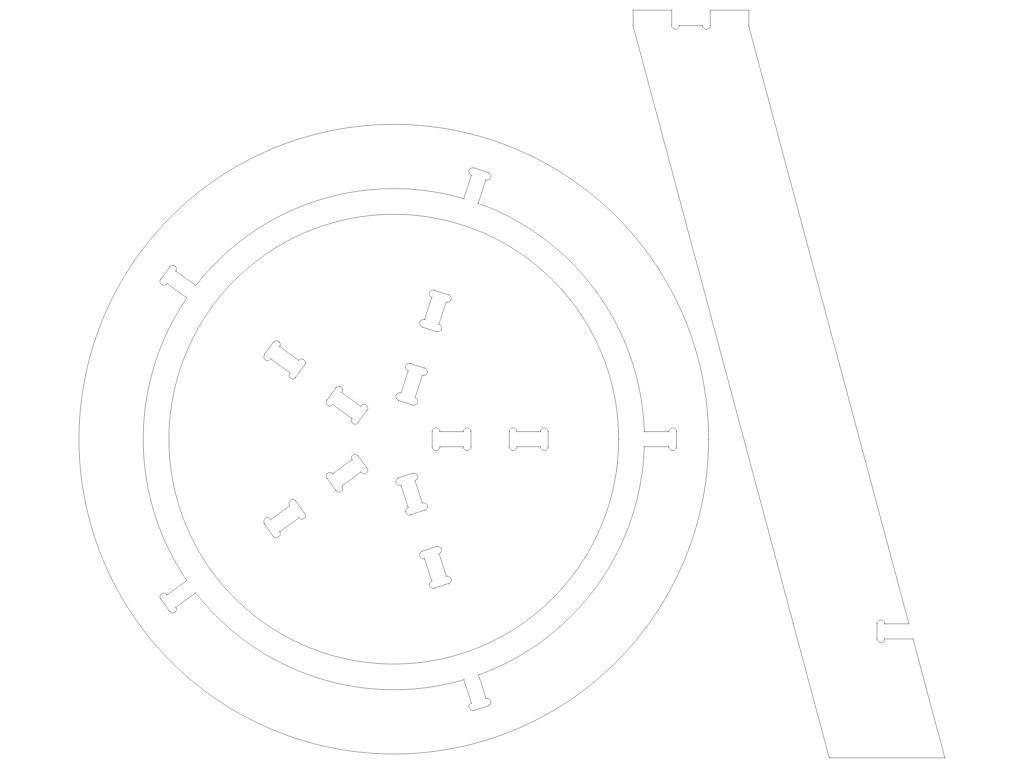

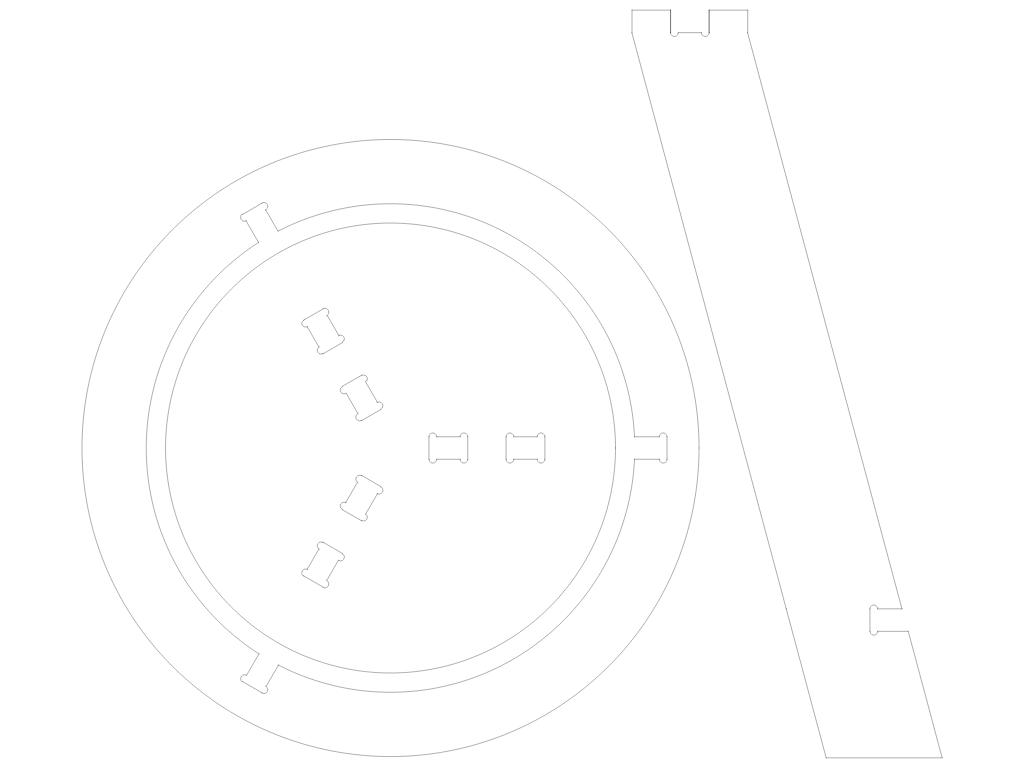

Of the remaining defects identified, the majority are false positives and several (like the example in the image above) are simply improbable, requiring a large number of steps to reach.

This shows up the main problem with the scan-build tool in that there is no way to suppress certain checks, mark defects as erroneous or avoid false positives using a model file. This reduces the usefulness of these builds because the developers all need to remember that this list of defects is not relevant.

Most of the NetSurf developers know that the project currently has 107 outstanding issues, and if a code change or tool improvement were to change that value we would have to manually work through the defect list one by one to check what had changed.

Coverity

The Coverity SAVE tool is a commercial offering from a company founded in the Computer Systems Laboratory at Stanford University in Palo Alto, California. The original novel research has produced a good solution which improved on the analysis tools previously available.

The company hosts a gratis service for open source projects. They even provide scans for the Linux kernel, so project size does not appear to be an issue.

The challenges faced integrating the Coverity tool into the build process differed from those of clang; however, the issue of execution time remained and the CI service was again used.

The Coverity scanning tool is a binary executable which collects data on the build, which is then submitted to the Coverity service to be analysed. Running this tool obviously requires the developer to trust Coverity to some degree.

A basic examination of the binary was performed and determined that the executable was not establishing network connections or performing any observably undesirable behaviour. From this investigation the decision was made that running the tool inside a sandbox environment on a CI build slave was safe. The CI system also submits the collected results in a compressed form directly to the Coverity scan service.

Care must be taken to only submit builds according to the service's Acceptable Use Policy, which limits the submission frequency of NetSurf scans to every other day. To ensure the project stays within the rules, the build performed by the CI system is manually controlled and confined to a subset of NetSurf developers.

The results are presented using Coverity Connect, a web based defect management tool. Access to the Coverity Connect interface is controlled by a user management system, which precludes publicly publishing the results within the CI system. Unfortunately NetSurf itself does not currently have good enough JavaScript DOM bindings to support this interface, so another browser must be used to view it.

Despite the drawbacks, the quality of the analysis results is greatly superior to the clang solution. The false positive rate is very low, and the tool finds many real issues which had not previously been detected.

The analysis can be enhanced by use of collection configuration and modelling files which remove intended constructions from consideration reducing the false positive rate to very low levels. The ability to easily and persistently suppress false positives through the web interface is also available.

The false positive management capabilities coupled with a user interface that makes understanding the defect path simple make this solution very practical and indeed the NetSurf developers have removed over 50 actual issues within a relatively short period since the introduction of the tool.

Not all of those defects could be considered serious but they had the effect of encouraging deeper inspection of some very dubious smelling source.

Conclusions

The principal conclusions of implementing and using static analysis have been:

- It is a powerful tool which aids programmers in improving their software.

- It is not a panacea and bad code can have no defects.

- It can suggest possible defects early in the development cycle.

- It can highlight possibly problematic areas well before they affect a program's users.

- The tool and the infrastructure around it have a large impact on the usefulness of the results.

- The way results are presented has a disproportionately significant impact on the usability of the defect reports.

- The open source tools are good, and improving, but Coverity currently provides a superior experience.

- Integration into a project's CI system is beneficial.

When I started looking at this technology I was somewhat dubious about its usefulness but I have definitely changed my mind. It is a useful addition to any non-trivial project and the return on time and effort should be repaid handsomely in all but already perfect code (if you believe you have such code I have a bridge to sell you).

![Isaac Newton by Sir Godfrey Kneller [Public domain], via Wikimedia Commons](http://upload.wikimedia.org/wikipedia/commons/3/39/GodfreyKneller-IsaacNewton-1689.jpg)